Azure for AWS professionals - Storage - Azure - 02 Data Transfer Options

@20aman Sep 01, 2019

Note that this post is part of a series. You can view all posts in this series here: Azure for AWS professionals - Index

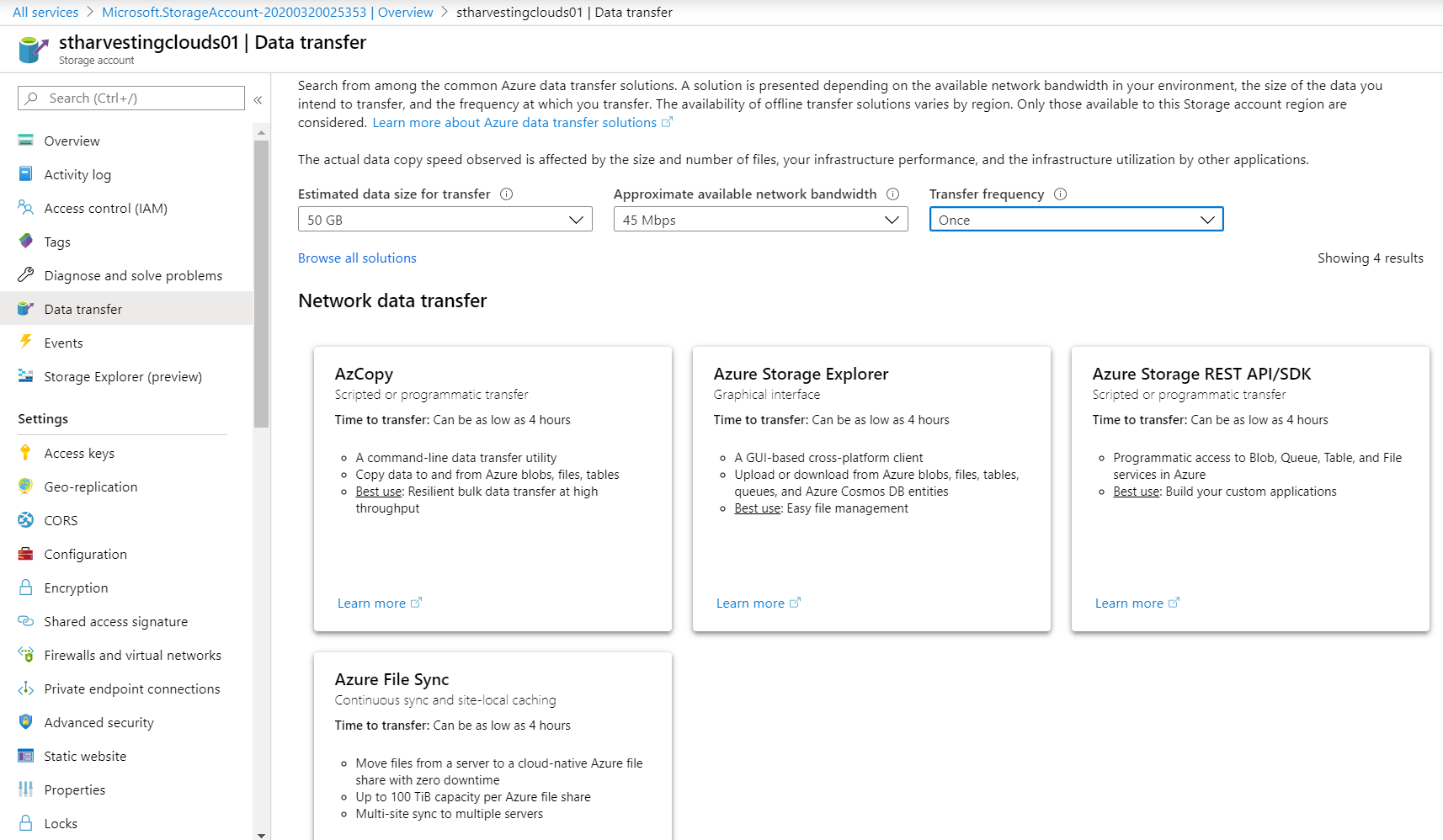

When you have a Storage account in Microsoft Azure, you will want to transfer data into Blob storage to make use of it. The initial data transfer can be very large, so it pays to pick the transfer method that suits it best.

Navigate to your Storage account and click the "Data transfer" setting to view all the available options. Use this section to enter your estimated data size, approximate network bandwidth, and transfer frequency. Based on your selections, the wizard shows the options best suited to your transfer.
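To see why data size and bandwidth matter so much, here is a rough back-of-the-envelope sketch in Python. It is not what the portal wizard computes; the function name `transfer_days` and the `utilization` factor (the share of the link you can realistically keep busy) are assumptions made for illustration.

```python
def transfer_days(data_tb: float, bandwidth_mbps: float, utilization: float = 0.8) -> float:
    """Estimate how many days an online transfer would take.

    data_tb        -- data size in decimal terabytes (1 TB = 10**12 bytes)
    bandwidth_mbps -- nominal link speed in megabits per second
    utilization    -- assumed fraction of the link available for the transfer
    """
    if data_tb < 0 or bandwidth_mbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("data size must be >= 0, bandwidth > 0, utilization in (0, 1]")
    bits = data_tb * 1e12 * 8                          # terabytes to bits
    effective_bps = bandwidth_mbps * 1e6 * utilization  # usable bits per second
    return bits / effective_bps / 86_400                # seconds to days


if __name__ == "__main__":
    # 80 TB over a 1 Gbps link at the assumed 80% utilization
    print(f"{transfer_days(80, 1000):.1f} days")
```

Under these assumptions, 80 TB over a 1 Gbps link takes a little over nine days, which is the kind of result that makes the offline options later in this post worth considering.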

Let's look at these options closely.

1. AzCopy

This is a command-line utility for scripted or programmatic transfer.

- A command-line data transfer utility

- Copy data to and from Azure blobs, files, tables

- Best use: Resilient bulk data transfer at high throughput

You can learn more about this service here: AzCopy
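As a minimal sketch of what a scripted AzCopy transfer can look like, the Python helper below builds an `azcopy copy` command for uploading a local folder to a blob container. It assumes AzCopy v10 is installed and on your `PATH`; the helper name `azcopy_upload_command` and the account, container, and SAS token values are hypothetical placeholders.

```python
from typing import List


def azcopy_upload_command(local_dir: str, account: str, container: str, sas_token: str) -> List[str]:
    """Build the argument list for uploading a folder with AzCopy v10."""
    # Destination is the container URL with the SAS token as the query string.
    destination = (
        f"https://{account}.blob.core.windows.net/{container}"
        f"?{sas_token.lstrip('?')}"
    )
    # --recursive copies the folder and everything beneath it.
    return ["azcopy", "copy", local_dir, destination, "--recursive"]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    cmd = azcopy_upload_command("./data", "myaccount", "backups", "<sas-token>")
    print(cmd)
```

To actually run the transfer, you could pass the returned list to `subprocess.run(cmd, check=True)`.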

2. Azure PowerShell or Azure CLI

These are actually two different options for scripted or programmatic transfer.

- A command-line interface to manage Azure Resources

- Best use: Build scripts to manage Azure resources and small data sets

- Azure PowerShell installs on Windows, or you can use it in the browser with Azure Cloud Shell

- Azure CLI installs on macOS, Linux, and Windows, or you can use it in the browser with Azure Cloud Shell

You can learn more about Azure PowerShell here: Azure PowerShell

You can learn more about Azure CLI here: Azure CLI

3. Directly from the Azure Portal

This is the easiest of all the options, because you upload the data directly from the Azure portal.

- A web-based interface

- Explore files and upload new files one at a time

- Best use: If you don’t want to install tools or issue commands

You can learn more about this service here: Data transfer for small datasets with low to moderate network bandwidth

4. Azure Data Factory

Here you use managed data pipelines, which give you the most control over the data coming into the Azure storage account.

- A hybrid data integration service with enterprise-grade security

- Create, schedule, manage data integration at scale

- Best use: Build recurring data movement pipelines

You can learn more about this service here: Azure Data Factory

5. Azure Storage Explorer

Another graphical interface (GUI) option, provided through a standalone utility that you can install on your laptop or desktop.

- A GUI-based cross-platform client

- Upload or download from Azure blobs, files, tables, queues, and Azure Cosmos DB entities

- Best use: Easy file management

You can learn more about this service here: Azure Storage Explorer

6. Azure Storage REST API/SDK

This option uses the underlying APIs/SDKs directly to transfer the data. It is highly customizable: you can write your own code for scripted or programmatic transfer, or even build your own utilities on top of it.

- Programmatic access to Blob, Queue, Table, and File services in Azure

- Best use: Build your custom applications

You can learn more about this service here: Azure Storage REST API Reference
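To give a feel for the REST route, here is a small sketch that prepares a Put Blob request using only the Python standard library. It assumes you authorize with a SAS token that has write permission; the helper name `build_put_blob_request` and the account, container, and blob values are hypothetical placeholders. The request is only built here, not sent.

```python
import urllib.request
from urllib.parse import quote


def build_put_blob_request(account: str, container: str, blob_name: str,
                           sas_token: str, data: bytes) -> urllib.request.Request:
    """Prepare a Put Blob request that uploads `data` as a block blob."""
    # quote() percent-encodes spaces and similar characters but keeps "/",
    # so virtual folder paths in the blob name are preserved.
    url = (
        f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name)}"
        f"?{sas_token.lstrip('?')}"
    )
    return urllib.request.Request(
        url,
        data=data,
        method="PUT",
        # Put Blob requires the blob type header; BlockBlob suits ordinary files.
        headers={"x-ms-blob-type": "BlockBlob"},
    )


if __name__ == "__main__":
    # Placeholder values for illustration only.
    req = build_put_blob_request("myaccount", "backups", "reports/summary.csv",
                                 "<sas-token>", b"id,total\n1,42\n")
    print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` should return HTTP 201 (Created) on success. For anything beyond a quick experiment, the official SDKs handle retries, chunking, and authentication for you.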

7. Azure File Sync

This is the continuous sync and site-local caching option.

- Move files from a server to a cloud-native Azure file share with zero downtime

- Up to 100 TiB capacity per Azure file share

- Multi-site sync to multiple servers

You can learn more about this service here: Planning for an Azure File Sync deployment

8. Azure Data Box Edge and Data Box Gateway

Azure Data Box Edge is an on-premises device.

- On-premises Microsoft physical network device. It supports SMB/NFS

- Edge compute processes data in local cache before fast, low bandwidth usage transfer to Azure

- Best use: Preprocess data, inference Azure ML, continuous ingestion, incremental transfer

Azure Data Box Gateway, by contrast, is a virtual device that also sits on-premises.

- On-premises virtual network device in your hypervisor

- Local cache-based fast, low bandwidth usage transfer to Azure over SMB/NFS

- Best use: Continuous ingestion, cloud archival, incremental transfer

You can learn more about this service here: Azure Stack Edge

9. Azure Data Box and Data Box Disk

Azure Data Box is an offline transfer option that uses a physical device.

Time to transfer: Typically 80 TB/order in 10-20 days

- A 100 TB (80 TB usable) rugged, encrypted device shipped by Microsoft

- Offline transfer to Azure Files, blobs, managed disks, over SMB/NFS/REST

- Best use: Initial/recurring bulk data transfer for medium to large data sets

Azure Data Box Disk is very similar to Data Box. It is also an offline transfer via a device (in this case, a set of disks).

Time to transfer: Typically 35 TB/order in 5-10 days

- A 40 TB (35 TB usable) set of up to five encrypted 8 TB SSDs shipped by Microsoft

- Mount USB 3.0/SATA disks as drives for transfer to Azure Files, blobs, managed disks

- Best use: Initial/recurring bulk transfer for small to medium data sets

You can learn more about the Azure Data Box service here: Azure Data Box
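The usable capacities quoted above make it easy to estimate how many orders a given data set would need. The sketch below is simple arithmetic in Python; the function name `orders_needed` is an assumption for illustration, and the capacities are the per-order figures from this post (80 TB for Data Box, 35 TB for Data Box Disk).

```python
import math

# Usable capacity per order, as quoted in this post.
DATA_BOX_USABLE_TB = 80
DATA_BOX_DISK_USABLE_TB = 35


def orders_needed(data_tb: float, usable_tb_per_order: float) -> int:
    """Return how many orders are needed to hold data_tb terabytes."""
    if data_tb <= 0 or usable_tb_per_order <= 0:
        raise ValueError("data size and capacity must be positive")
    # Round up: a partly filled device still counts as a full order.
    return math.ceil(data_tb / usable_tb_per_order)


if __name__ == "__main__":
    size_tb = 200  # example data set size
    print("Data Box orders:", orders_needed(size_tb, DATA_BOX_USABLE_TB))
    print("Data Box Disk orders:", orders_needed(size_tb, DATA_BOX_DISK_USABLE_TB))
```

For a 200 TB data set this gives 3 Data Box orders or 6 Data Box Disk orders, which is one way to see why Data Box Disk is positioned for the smaller end of the range.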

10. Azure Import/Export

This is another offline transfer option, this time using your own disks. Key features are:

- Ship up to 10 of your own disks to transfer data to and from Azure

- Mount disks as drives for offline transfer to Azure Files, Blobs

- Best use: Initial bulk transfer for small to medium data sets

You can learn more about this service here: Azure Import/Export